Data Ethics in EdTech: Protecting Student Privacy and Data Rights

Data Minimization Principles

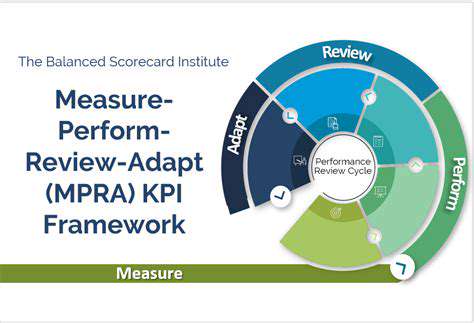

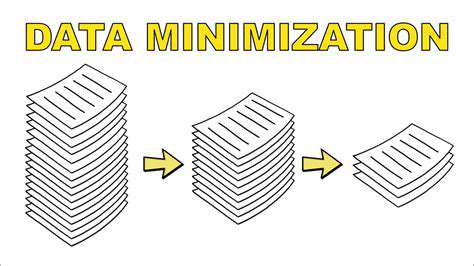

Data minimization means collecting only the personal information that a specific, legitimate purpose requires. Rather than gathering extensive datasets indiscriminately, institutions that follow this principle take a targeted approach to collection. The practice does more than satisfy regulatory requirements; it changes how organizations manage risk. Information that is never collected cannot be exposed in a breach or misused later, so limiting collection both reduces risk and respects individual privacy boundaries.

Implementation starts with a careful analysis of every system that handles student data. Each data field must justify its existence by demonstrating a clear operational need. This vetting often reveals opportunities to streamline operations while strengthening privacy protections: many organizations discover they have been maintaining obsolete data streams that no longer serve their original purpose, which are both a liability and an unnecessary operational burden.
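
A field audit of this kind can be sketched in a few lines of Python. This is a minimal sketch, assuming a hypothetical registry (`FIELD_JUSTIFICATIONS`) that maps each permitted field to the operational need it serves; the field names and purposes are illustrative and not drawn from any real system.

```python
# Hypothetical registry: each permitted field maps to the operational need it serves.
# Field names and purposes are illustrative assumptions, not a real schema.
FIELD_JUSTIFICATIONS: dict[str, str] = {
    "student_id": "link coursework to an enrolled learner",
    "grade_level": "select age-appropriate content",
    "assignment_scores": "report progress to teachers",
}


def audit_fields(collected_fields: list[str]) -> list[str]:
    """Return, sorted, the collected fields that have no documented justification."""
    return sorted(f for f in collected_fields if f not in FIELD_JUSTIFICATIONS)


def minimize(record: dict[str, object]) -> dict[str, object]:
    """Keep only justified fields, dropping everything else before storage."""
    return {k: v for k, v in record.items() if k in FIELD_JUSTIFICATIONS}


if __name__ == "__main__":
    incoming = {"student_id": "s-1042", "grade_level": 7, "home_address": "12 Example St"}
    print(audit_fields(list(incoming)))  # fields to question or stop collecting
    print(minimize(incoming))            # what would actually be stored
```

Running the audit over every collection point surfaces candidate fields to retire, while `minimize` enforces the same list at write time.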

Purpose Limitation Requirements

Purpose limitation sets boundaries on how collected data may be used. Information gathered for a stated purpose should serve only that purpose and not drift into unrelated uses, a problem often called function creep. When educational technology platforms specify exactly how data will be used at the point of collection, they make a transparent commitment to users that helps maintain trust throughout the data lifecycle.

Documenting these usage parameters serves multiple critical functions. First, it creates accountability structures within organizations. Second, it provides clear reference points for compliance audits. Most importantly, explicit purpose documentation gives data subjects tangible evidence of an organization's commitment to ethical data stewardship. This documentation becomes particularly valuable when explaining data practices to regulatory bodies or concerned stakeholders.
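
Documented purposes are most useful when systems check them at the point of use. The sketch below assumes a hypothetical `PurposeRegistry` class that records the purposes declared for each data category at collection time, refuses any undeclared use, and logs every decision so it can support a later compliance audit.

```python
from dataclasses import dataclass, field


@dataclass
class PurposeRegistry:
    """Hypothetical registry of purposes declared for each data category at collection time."""
    declared: dict[str, set[str]] = field(default_factory=dict)
    # Every check is logged as (category, purpose, allowed) for later audits.
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def declare(self, category: str, purpose: str) -> None:
        """Record a purpose stated to users when the category was collected."""
        self.declared.setdefault(category, set()).add(purpose)

    def check(self, category: str, purpose: str) -> bool:
        """Allow a use only if that exact purpose was declared for the category."""
        allowed = purpose in self.declared.get(category, set())
        self.audit_log.append((category, purpose, allowed))
        return allowed


if __name__ == "__main__":
    registry = PurposeRegistry()
    registry.declare("assignment_scores", "progress_reporting")
    print(registry.check("assignment_scores", "progress_reporting"))
    print(registry.check("assignment_scores", "ad_targeting"))
    print(registry.audit_log)
```

Logging denied requests as well as permitted ones gives auditors evidence that the boundaries are actually enforced, not merely written down.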

Practical Application and Benefits

Transitioning to minimized data frameworks requires both technological adjustments and cultural shifts within organizations. Teams must develop new protocols for data requests, implementing multiple verification layers before approving new collection initiatives. This deliberate approach naturally filters out unnecessary data demands while highlighting truly essential information needs.
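
One way to encode verification layers for new data requests is a checklist that each request must pass before collection begins. In this sketch, `CollectionRequest`, the required approver roles (`data_owner`, `privacy_officer`), and the specific checks are assumptions chosen for illustration; each institution would define its own.

```python
from dataclasses import dataclass, field

# Assumed sign-off roles; an institution would define its own.
REQUIRED_APPROVERS = frozenset({"data_owner", "privacy_officer"})


@dataclass
class CollectionRequest:
    """A hypothetical request to start collecting a new data field."""
    field_name: str
    purpose: str
    retention_days: int
    approvals: set[str] = field(default_factory=set)


def review(request: CollectionRequest) -> list[str]:
    """Return the reasons the request cannot yet proceed; an empty list means approved."""
    problems = []
    if not request.purpose.strip():
        problems.append("missing documented purpose")
    if request.retention_days <= 0:
        problems.append("missing retention period")
    missing = REQUIRED_APPROVERS - request.approvals
    if missing:
        problems.append("awaiting sign-off: " + ", ".join(sorted(missing)))
    return problems


if __name__ == "__main__":
    req = CollectionRequest("parent_phone", "emergency contact", 365, {"data_owner"})
    print(review(req))
    req.approvals.add("privacy_officer")
    print(review(req))
```

Returning every unmet condition at once, rather than stopping at the first, lets requesters fix all gaps in one pass.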

The resulting ecosystem offers advantages beyond regulatory compliance. Storage costs fall when organizations purge data they no longer need, and processing becomes more efficient as systems handle leaner, more relevant datasets. Perhaps most significantly, these practices build an institution's reputation for taking privacy seriously, an increasingly valuable differentiator in the education technology market: platforms that can demonstrate responsible data handling are better placed to earn the trust of parents and students.

Data Security and Protection: Safeguarding Sensitive Information

Data Minimization and Purpose Limitation

Strategic data collection practices form the foundation of effective security. By limiting what they collect in the first place, institutions shrink the volume of sensitive data an attacker could reach. This preventative approach contrasts sharply with traditional models that collect first and secure later. Modern frameworks recognize that the most secure data is the data never collected.

Data Integrity and Accuracy

Maintaining high data quality requires continuous oversight. Many institutions use automated validation systems that flag anomalies in real time, before errors propagate into downstream systems. These systems work alongside manual reviews conducted by trained data stewards, and the combination provides multi-layered quality assurance that preserves dataset reliability throughout its lifecycle.
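
Automated validation can be as simple as rule checks run on every incoming record, with failures routed to a human review queue. The `StudentRecord` fields and the rules below (for example, grade levels 0 to 12, with 0 standing for kindergarten) are hypothetical and serve only to illustrate the pattern.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class StudentRecord:
    """Hypothetical record layout used only for this example."""
    student_id: str
    grade_level: int          # assumed scale: 0 (kindergarten) to 12
    enrollment_date: date
    attendance_rate: float    # fraction of sessions attended, 0.0 to 1.0


def validate(record: StudentRecord, today: date) -> list[str]:
    """Return anomaly flags for a record; an empty list means it passed every rule."""
    flags = []
    if not record.student_id:
        flags.append("missing student_id")
    if not 0 <= record.grade_level <= 12:
        flags.append(f"grade_level out of range: {record.grade_level}")
    if record.enrollment_date > today:
        flags.append("enrollment_date is in the future")
    if not 0.0 <= record.attendance_rate <= 1.0:
        flags.append(f"attendance_rate out of range: {record.attendance_rate}")
    return flags


def partition(records: list[StudentRecord], today: date) -> tuple[list[StudentRecord], list[StudentRecord]]:
    """Split records into those safe to store and those held for a data steward's review."""
    clean: list[StudentRecord] = []
    review_queue: list[StudentRecord] = []
    for record in records:
        (review_queue if validate(record, today) else clean).append(record)
    return clean, review_queue
```

Holding flagged records back, instead of silently correcting them, keeps a human steward in the loop for ambiguous cases.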

Data Security Controls and Measures

Contemporary security architectures employ defense-in-depth strategies. Encryption now extends beyond protecting whole databases or disks at rest to include field-level encryption for particularly sensitive information. Access controls can incorporate behavioral analytics that detect unusual usage patterns, while physical security measures may use biometric verification to control access to critical infrastructure.
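
Commercial behavioral analytics products use far richer models, but the core idea can be sketched with a simple statistical baseline: flag an account whose daily record-access count sits well above its own history. The function name, the choice of a z-score test, and the default threshold of 3 standard deviations are assumptions for illustration.

```python
import statistics


def is_unusual_access(history: list[int], today_count: int, threshold: float = 3.0) -> bool:
    """Flag today's record-access count if it is more than `threshold`
    standard deviations above the account's own historical mean (a z-score test)."""
    if len(history) < 2:
        return False  # too little history to establish a baseline
    mean = statistics.mean(history)
    spread = statistics.stdev(history)  # sample standard deviation
    if spread == 0:
        return today_count > mean  # flat baseline: any rise above it is unusual
    return (today_count - mean) / spread > threshold


if __name__ == "__main__":
    baseline = [20, 22, 19, 21, 23, 20, 18]  # records viewed per day by one staff account
    print(is_unusual_access(baseline, 24))   # within normal variation
    print(is_unusual_access(baseline, 400))  # bulk access worth investigating
```

Comparing each account against its own baseline, rather than a global limit, avoids penalizing roles that legitimately access many records.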

Data Subject Rights and Access

Many modern systems offer self-service portals that let users manage their data directly. These interfaces give a transparent view of the information collected and provide simple controls for requesting updates or deletions. This shift from institutional control to shared stewardship marks a fundamental change in the relationship between institutions and the people whose data they hold.
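
The sketch below models the three basic self-service operations (view, update, delete) against an in-memory store. `SelfServicePortal` and its `editable` field list are hypothetical; a real portal would also need authentication and persistent storage.

```python
import json
from dataclasses import dataclass, field


@dataclass
class SelfServicePortal:
    """Hypothetical in-memory backend; `store` maps each user ID to that user's stored fields."""
    store: dict[str, dict[str, str]] = field(default_factory=dict)
    # Fields users may correct themselves; other changes go through staff.
    editable: frozenset[str] = frozenset({"preferred_name", "email"})

    def view(self, user_id: str) -> str:
        """Return everything held about the user as JSON."""
        return json.dumps(self.store.get(user_id, {}), sort_keys=True)

    def update(self, user_id: str, name: str, value: str) -> None:
        """Let the user correct an editable field."""
        if name not in self.editable:
            raise PermissionError(f"{name} cannot be changed through self-service")
        self.store.setdefault(user_id, {})[name] = value

    def delete(self, user_id: str) -> bool:
        """Erase the user's record; return False if nothing was stored."""
        return self.store.pop(user_id, None) is not None
```

Limiting direct edits to a short allowlist keeps self-service useful for routine corrections while routing changes to official records, such as grades, through staff.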

Secure Data Storage and Transmission

Encryption standards continue to evolve to counter emerging threats. To prepare for future quantum attacks, some organizations are beginning to deploy quantum-resistant algorithms alongside traditional encryption rather than replacing it outright. Network defenses may also include deep packet inspection and machine-learning-based anomaly detection for data in transit.

Data Retention Policies and Disposal

Automated lifecycle management systems can enforce retention schedules precisely. They trigger review processes as data approaches its expiration date, then execute secure deletion procedures that meet or exceed regulatory requirements for data destruction. Some institutions also record disposals on a blockchain or similar tamper-evident ledger, so that the deletion record itself cannot be silently altered.
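
Both ideas can be sketched briefly. `retention_action` classifies a record against its retention period, using an assumed 30-day review window before expiry. `DisposalLog` is a hash chain, a simpler centralized stand-in for a blockchain: each entry includes the hash of the previous one, so altering any past entry breaks verification. Like a blockchain, it shows that the log has not been rewritten, not that the underlying data was actually destroyed. All names here are hypothetical.

```python
import hashlib
import json
from datetime import date, timedelta

REVIEW_WINDOW = timedelta(days=30)  # assumption: start review 30 days before expiry
GENESIS = "0" * 64                  # placeholder "previous hash" for the first entry


def retention_action(created: date, retention: timedelta, today: date) -> str:
    """Classify a record as 'keep', 'review' (nearing expiry), or 'delete' (expired)."""
    expires = created + retention
    if today >= expires:
        return "delete"
    if today >= expires - REVIEW_WINDOW:
        return "review"
    return "keep"


def _digest(body: dict[str, str]) -> str:
    """Hash an entry's contents in a stable (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class DisposalLog:
    """Append-only log where each entry hashes the previous one, making edits detectable."""

    def __init__(self) -> None:
        self.entries: list[dict[str, str]] = []

    def record(self, record_id: str, deleted_on: date) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"record_id": record_id, "deleted_on": deleted_on.isoformat(), "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash and link; any tampered entry makes this return False."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("record_id", "deleted_on", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

In practice, records classified as `review` would go to a human before deletion, and each completed deletion would be appended to the log.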

Data Breach Response and Recovery

Mature incident response plans may also use predictive modeling to anticipate likely breach scenarios. These living documents are updated regularly as new threat intelligence emerges, and response teams train through simulated attacks that test both technical responses and communication protocols, so they are prepared for real incidents.
