Gamification for Behavioral Change in Education

Defining Clear Learning Objectives

Before adding game elements, educators must clarify what students should know and be able to do. These objectives should be specific, measurable, and directly tied to curriculum standards. For example, rather than "understand fractions," a strong objective might be "students will accurately add and subtract fractions with unlike denominators in word problems."

This precision ensures game mechanics serve the learning rather than becoming distracting entertainment. Every badge, level, or point system should reinforce mastery of defined skills.

Choosing Appropriate Game Mechanics

Different learning goals call for different game elements. Progress bars and experience points work well for linear skill-building, while branching narratives suit exploratory learning. Leaderboards can motivate competitive students but may discourage those who struggle—in such cases, personal best trackers often prove more effective.
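As a rough illustration of a personal best tracker, the sketch below compares each new score only against the same student's earlier scores, never against classmates. The class name, method name, and feedback wording are all hypothetical choices made for this example, not part of any particular platform.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalBestTracker:
    """Tracks each student's own best score per activity (hypothetical helper)."""

    # Maps (student, activity) to that student's best score so far.
    bests: dict[tuple[str, str], float] = field(default_factory=dict)

    def record(self, student: str, activity: str, score: float) -> str:
        """Store the score and return feedback relative to the student's own history."""
        key = (student, activity)
        previous = self.bests.get(key)
        if previous is None:
            self.bests[key] = score
            return f"First attempt recorded: {score:g}"
        if score > previous:
            self.bests[key] = score
            return f"New personal best: {score:g} (up from {previous:g})"
        # No comparison with other students is ever made.
        return f"Score {score:g}; personal best remains {previous:g}"
```

Because the only reference point is the student's own history, a learner who moves from 6 to 8 gets the same kind of recognition as one who moves from 16 to 18.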

The key is matching mechanics to both learning objectives and student demographics. Primary students might enjoy colorful badges and character customization, while older learners often respond better to real-world impact metrics and skill mastery visualizations.

Creating Engaging and Rewarding Experiences

Effective educational games provide frequent, meaningful feedback. Rather than generic "good job" messages, specific praise like "You used three persuasive techniques in your argument" reinforces desired behaviors. Rewards should feel earned through genuine effort and progress.

Balancing challenge and achievability is crucial—tasks that are too easy bore students, while overly difficult ones frustrate. The ideal flow state occurs when challenge level slightly exceeds current ability, pushing learners to stretch their skills.
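One simple way to keep tasks near the edge of a student's ability is to adjust difficulty from their recent success rate. The sketch below is a minimal version of that idea; the function name, the 1 to 10 level scale, and the 60% and 85% thresholds are illustrative assumptions rather than research-derived values, and a real system would need tuning against classroom data.

```python
def next_difficulty(
    current: int,
    recent_results: list[bool],
    low: float = 0.6,    # assumed lower bound of the target success band
    high: float = 0.85,  # assumed upper bound of the target success band
    min_level: int = 1,
    max_level: int = 10,
) -> int:
    """Nudge difficulty by at most one level based on recent success rate."""
    if not recent_results:
        return current  # no evidence yet, so keep the level unchanged
    success_rate = sum(recent_results) / len(recent_results)
    if success_rate > high:
        return min(current + 1, max_level)  # likely too easy: step up
    if success_rate < low:
        return max(current - 1, min_level)  # likely too hard: step down
    return current  # success rate is inside the target band
```

Changing the level one step at a time keeps the adjustment gentle, so a single bad session does not drop a student several levels.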

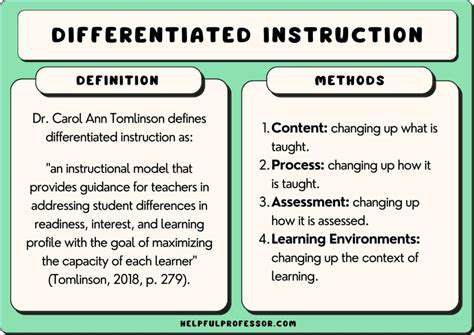

Designing for Different Learning Styles

Multimodal design improves accessibility for diverse learners. Students who favor visual material can work with infographics and spatial puzzles, those who favor listening and talking with discussion-based challenges, and those who favor hands-on work with simulations requiring physical interaction. Well-designed games incorporate multiple pathways to accommodate these differences.

For instance, a history game might allow students to demonstrate understanding through creating artwork, writing newspaper articles, or reenacting events—all within the same game framework.

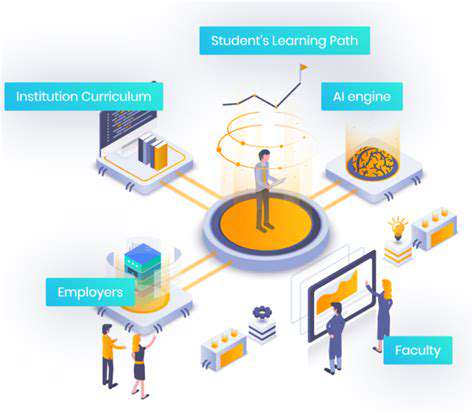

Implementing and Evaluating the Gamified Experience

Pilot testing reveals whether game elements work as intended. Observing students interact with the system shows where they get stuck or disengage. Analytics can track which features are used most and where students spend time, informing iterative improvements.

Effective evaluation compares learning outcomes between gamified and traditional approaches while also assessing qualitative factors like student enjoyment and perceived value.
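For the quantitative half of that comparison, one common summary is a standardized mean difference (Cohen's d) between the two groups' assessment scores. The sketch below computes it with the pooled sample standard deviation; the function name and the choice of this particular statistic are assumptions made for illustration, and it presumes each group has at least two scores from the same assessment.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(gamified: list[float], traditional: list[float]) -> float:
    """Standardized mean difference using the pooled sample standard deviation.

    Positive values mean the gamified group scored higher on average.
    """
    n1, n2 = len(gamified), len(traditional)
    s1, s2 = stdev(gamified), stdev(traditional)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(gamified) - mean(traditional)) / pooled
```

An effect size on its own does not show that gamification caused the difference, particularly when classes were not randomly assigned, which is one reason to read it alongside the qualitative evidence.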

Balancing Gamification with Learning

The most common pitfall is letting game elements overshadow educational substance. Points and badges should never become the focus; they are a means to an end. Regular reflection helps maintain this balance: Are students talking more about the content or the rewards? Are they developing deeper understanding or just gaming the system?

Periodically stripping away game layers to assess core learning ensures the experience remains educationally valid. The best gamified learning feels like play while delivering substantive growth.

Beyond the Leaderboard: Measuring and Adapting Gamification Strategies

Beyond the Quantifiable: Unveiling the True Value

Traditional metrics like completion rates and test scores tell only part of the story. The most significant learning often happens in the struggles between checkpoints—the failed attempts, creative detours, and collaborative problem-solving that don't appear on leaderboards. Capturing these qualitative aspects requires intentional observation and reflective practices.

Educators might document "aha" moments during gameplay, collect student reflections on their learning processes, or analyze unexpected strategies learners develop. These insights often reveal deeper understanding than standardized metrics alone.

The Importance of Contextual Understanding

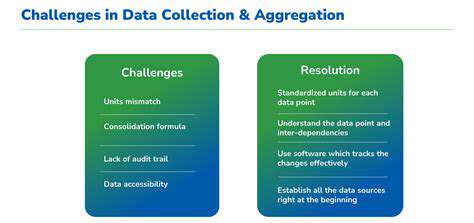

Performance data becomes meaningful only when interpreted in context. A student might post lower scores on timed tasks not for lack of ability but because they consider each answer carefully and run out of time. Another might score well by guessing rather than by demonstrating true mastery.

Effective assessment triangulates multiple data points—quantitative metrics, observational notes, student self-assessments—to form a complete picture. This approach avoids unfairly labeling students based on superficial indicators.

Qualitative Data Collection and Analysis

Rich assessment incorporates various evidence types: video recordings of group problem-solving, learning journals documenting thought processes, peer feedback on collaborative projects. These qualitative sources reveal how students approach challenges, adapt strategies, and support each other's learning.

Thematic analysis of such data can identify patterns in student thinking and common stumbling blocks, informing targeted instructional adjustments. For instance, when several students express the same misconception in their reflections, that concept likely needs to be revisited.

The Role of Feedback and Self-Reflection

Growth-oriented feedback focuses on specific behaviors rather than general praise or criticism. Instead of "Good work," effective feedback might say, "Your method of organizing the data helped identify the pattern. Try applying that approach to the next challenge." This specificity helps students understand and replicate successful strategies.

Structured reflection prompts guide students to analyze their own learning processes: "What strategy worked best for you?" "Where did you get stuck, and how did you overcome it?" This metacognitive practice builds self-awareness and transferable learning skills.

Promoting a Holistic Approach to Evaluation

Truly comprehensive assessment values both products and processes, individual and collaborative work, speed and depth of understanding. It recognizes that different students demonstrate mastery in different ways and provides multiple avenues for showing growth.

Portfolio assessments that curate diverse work samples over time often provide the most complete picture of student development. When students select and defend their best work, they engage in valuable self-evaluation while demonstrating integrated understanding.