AI and Ethical Decision Making: Developing Moral Reasoning

Advanced techniques such as adversarial debiasing and fairness constraints applied during training are promising solutions, but they require significant expertise to implement effectively. That complexity should not deter the effort, however: creating equitable AI demands this level of commitment if automated systems are not to cement existing societal inequalities.
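Of the two techniques, a fairness constraint is the simpler one to illustrate. The sketch below is a minimal example under stated assumptions, not a production method. It trains a plain logistic regression in pure Python and adds a soft demographic-parity penalty, `lam * gap**2`, where `gap` is the difference in mean predicted score between two groups. The function names, the penalty form, and the toy data are all hypothetical. Adversarial debiasing, which trains a second model to predict the protected attribute from the first model's outputs, is not shown.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def parity_gap(preds, groups):
    """Mean predicted score for group 1 minus mean predicted score for group 0."""
    g1 = [p for p, g in zip(preds, groups) if g == 1]
    g0 = [p for p, g in zip(preds, groups) if g == 0]
    return sum(g1) / len(g1) - sum(g0) / len(g0)


def predict(w, b, X):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]


def train_fair_logreg(X, y, groups, lam=0.0, lr=0.5, epochs=2000):
    """Hypothetical helper: gradient descent on mean cross-entropy + lam * parity_gap**2."""
    n, d = len(X), len(X[0])
    n1 = sum(groups)  # groups are encoded as 0/1
    n0 = n - n1
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        preds = predict(w, b, X)
        gap = parity_gap(preds, groups)
        grad_w, grad_b = [0.0] * d, 0.0
        for x, yi, gi, p in zip(X, y, groups, preds):
            dz = (p - yi) / n  # gradient of mean cross-entropy w.r.t. the logit
            # Gradient of lam * gap**2 w.r.t. the logit, through the sigmoid.
            sign = 1.0 / n1 if gi == 1 else -1.0 / n0
            dz += 2.0 * lam * gap * sign * p * (1.0 - p)
            for j in range(d):
                grad_w[j] += dz * x[j]
            grad_b += dz
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data (hypothetical): feature 0 acts as a proxy for group membership.
X = [[1.0, 0.2], [1.0, 0.9], [1.0, 0.4], [1.0, 0.8],
     [0.0, 0.3], [0.0, 0.7], [0.0, 0.1], [0.0, 0.6]]
y = [1, 1, 0, 1, 0, 1, 0, 0]
groups = [1, 1, 1, 1, 0, 0, 0, 0]

w_plain, b_plain = train_fair_logreg(X, y, groups, lam=0.0)
w_fair, b_fair = train_fair_logreg(X, y, groups, lam=5.0)
gap_plain = parity_gap(predict(w_plain, b_plain, X), groups)
gap_fair = parity_gap(predict(w_fair, b_fair, X), groups)
```

Raising `lam` trades some predictive fit for a smaller gap between groups. Demographic parity is only one of several competing fairness definitions, so choosing which gap to penalize is itself an ethical decision, not a purely technical one.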

Ensuring Accountability and AI Responsibility

As AI assumes greater decision-making roles, we must answer a fundamental question: who answers when things go wrong? Clear delineation of responsibilities among developers, implementers, and end-users forms the foundation of ethical deployment. Comprehensive legal frameworks must evolve in parallel, establishing protocols for addressing harms caused by AI systems while protecting against misuse. This combination of defined roles and legal safeguards creates the checks and balances necessary for responsible AI utilization.

Human Oversight as a Critical Safeguard

No matter how advanced AI becomes, human judgment remains irreplaceable. These systems should enhance human capabilities, not replace them entirely. Maintaining meaningful human control means preserving veto power over critical decisions and providing straightforward ways to intervene. Designing intuitive interfaces that support this oversight is a key challenge: even the most carefully designed system fails ethically if humans cannot effectively monitor and guide its actions when necessary.
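One common way to make veto power concrete is to route certain decisions through a human before they take effect. The sketch below assumes a hypothetical `Decision` record with a model confidence score and a `high_stakes` flag, and a hypothetical `reviewer` callback that either approves (returns `None`) or overrides with a different action. The threshold and routing rule are illustrative assumptions, not a recommended policy.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional


@dataclass(frozen=True)
class Decision:
    case_id: str
    action: str
    confidence: float  # model's confidence in [0, 1]
    high_stakes: bool
    decided_by: str = "model"


# A reviewer returns None to approve, or a replacement action to veto.
Reviewer = Callable[[Decision], Optional[str]]


def route_decision(decision: Decision, reviewer: Reviewer,
                   min_confidence: float = 0.9) -> Decision:
    """Send high-stakes or low-confidence decisions to a human before acting."""
    needs_review = decision.high_stakes or decision.confidence < min_confidence
    if not needs_review:
        return decision
    override = reviewer(decision)
    if override is None:
        return replace(decision, decided_by="human-approved")
    return replace(decision, action=override, decided_by="human-override")


def cautious_reviewer(d: Decision) -> Optional[str]:
    """Hypothetical reviewer policy: never let the system deny on its own."""
    return "escalate" if d.action == "deny" else None
```

Recording who made the final call (`decided_by`) also feeds the accountability structures discussed elsewhere: it shows, case by case, where a human intervened.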

Defining and Integrating Moral Principles

Moral Foundations for AI Systems

Core human values such as fairness, honesty, and respect must inform AI development, not as rigid rules but as guiding principles. The goal isn't to create artificial moral agents, but systems whose outputs align with these values. Different philosophical traditions offer valuable perspectives: utilitarianism's focus on collective wellbeing, deontology's rule-based approach, and virtue ethics' emphasis on moral character all contribute to a nuanced understanding of ethical AI design.

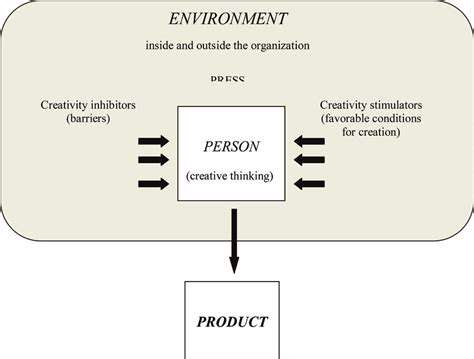

Operationalizing Ethics in AI Design

Embedding morality in AI extends far beyond programming ethical rulesets. It requires examining training data for hidden biases, ensuring decision processes remain interpretable, and establishing clear accountability structures. Developers must constantly ask: how will this system impact different groups? This holistic perspective considers not just immediate users but broader societal effects and environmental consequences.

The bias challenge deserves particular attention. Even with good intentions, AI can perpetuate discrimination if trained on flawed data. Combating this demands meticulous data curation, comprehensive testing protocols, and ongoing bias monitoring throughout a system's operational life.
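A basic bias test compares how often a model selects members of each group. The sketch below computes per-group selection rates and the disparate impact ratio (lowest rate divided by highest rate), then checks it against the "four-fifths rule", a rule of thumb from US employment-selection guidelines that flags ratios below 0.8. The function names are hypothetical, and a single metric like this is a starting point for testing, not proof that a system is fair.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group: no disparity to measure
    return min(rates.values()) / highest


def passes_four_fifths(predictions, groups, threshold=0.8):
    return disparate_impact_ratio(predictions, groups) >= threshold


# Hypothetical model outputs for two groups of four applicants each.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
grps = ["A", "A", "A", "A", "B", "B", "B", "B"]
```

Here group A is selected at 0.75 and group B at 0.25, giving a ratio of about 0.33, well below the 0.8 threshold. Running such checks on every retrained model, and on live traffic, is one way to turn "ongoing bias monitoring" into a concrete procedure.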

Transparency as an Ethical Imperative

Trust in AI systems hinges on their explainability. When users understand how decisions are made, they can assess fairness and challenge questionable outputs. Achieving this requires translating complex algorithmic processes into understandable explanations while maintaining accuracy. Transparency serves two purposes: it builds public trust, and it creates opportunities to identify and correct ethical shortcomings.
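For a linear scoring model, an explanation can be both understandable and exact: each feature's contribution is its weight times how far the input sits from a reference baseline, and the contributions sum to the difference between the case's score and the baseline score. The sketch below assumes a hypothetical credit-style model; the feature names, weights, and baseline values are invented for illustration. Nonlinear models need approximate attribution methods (such as SHAP or LIME) to produce comparable explanations.

```python
def explain_linear(weights, bias, features, baseline):
    """Exact additive explanation of a linear score relative to a baseline input."""
    contributions = {
        name: weights[name] * (features[name] - baseline[name]) for name in weights
    }
    score = bias + sum(weights[n] * features[n] for n in weights)
    baseline_score = bias + sum(weights[n] * baseline[n] for n in weights)
    # Largest effects first, so the explanation leads with what mattered most.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, baseline_score, ranked


# Hypothetical model and applicant.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.1}
bias = -1.0
applicant = {"income": 4.0, "debt_ratio": 0.6, "years_employed": 3.0}
typical = {"income": 3.0, "debt_ratio": 0.3, "years_employed": 5.0}

score, base, ranked = explain_linear(weights, bias, applicant, typical)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

This applicant's score is lower than the typical applicant's mainly because of a higher debt ratio, partly offset by higher income: the kind of statement a user can actually check and contest.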

Accountability Structures for AI

Clear accountability mechanisms must define responsibility for AI actions across the development and deployment chain. This includes establishing oversight procedures, intervention protocols, and channels for addressing grievances. Equally important is planning for unintended consequences: ethical AI development anticipates potential failures and establishes response plans in advance.

Sector-Specific Ethical Challenges

AI's ethical implications vary dramatically across domains. Healthcare AI balances improved diagnostics against privacy concerns, financial algorithms raise questions about equal access, and law enforcement tools risk enabling surveillance overreach. Each sector requires tailored ethical guidelines addressing its unique risks and opportunities, developed through collaboration between technologists, ethicists, and domain experts.

Challenges and Future Directions in AI Ethics

Technological Hurdles in Ethical AI

Progress in ethical AI faces significant technical barriers. Developing reliable systems that integrate smoothly with existing infrastructure presents major engineering challenges, while implementation costs create adoption barriers, particularly for resource-limited organizations. These economic realities risk creating an AI ethics divide where only well-funded entities can afford responsible development practices.

Data security adds another layer of complexity. As AI systems process increasing amounts of sensitive information, robust encryption and access controls become non-negotiable for maintaining public trust. Addressing these technical challenges is essential for realizing AI's benefits while minimizing risks.

Societal Impacts and Public Trust

The societal implications of AI deployment require careful navigation. Ensuring equitable access and preventing algorithmic discrimination remain ongoing challenges that demand continuous attention. Equally important is maintaining public understanding: transparent communication about AI capabilities and limitations helps build the social license necessary for widespread adoption.

Proactive engagement with diverse communities helps identify potential concerns before they become crises, while inclusive design processes can create systems that better serve all segments of society. This collaborative approach represents our best hope for aligning AI development with societal values.

Sustainable Funding for Ethical AI

Long-term investment in ethical AI research remains crucial but challenging. Developing funding models that support both innovation and responsibility requires balancing competing priorities. Public-private partnerships offer one promising approach, pooling resources while maintaining accountability standards. Such collaborations can accelerate progress while ensuring ethical considerations remain central to development efforts.

Evolving Ethical Frameworks

As AI capabilities advance, ethical guidelines must continually adapt. This means regularly reassessing standards in light of technological developments and shifting societal values. Proactive ethical analysis helps prevent problems rather than just reacting to them, creating a foundation for sustainable AI development that serves humanity's best interests across generations.

The Human Element: Collaboration and Education

Irreplaceable Human Judgment

Despite AI's impressive capabilities, human oversight remains essential at every stage. From initial design through ongoing operation, human judgment provides the nuanced understanding that algorithms lack. This oversight becomes particularly crucial when identifying subtle biases, interpreting complex situations, and making value-based determinations that resist quantification.

Synergistic Human-AI Collaboration

The most promising AI applications combine machine efficiency with human insight. While AI excels at processing vast datasets and identifying patterns, humans bring contextual understanding and creative problem-solving. Designing systems that leverage these complementary strengths requires thoughtful interface design and clear role definitions. The goal should be augmentation, not replacement: partnerships in which humans and AI each contribute their unique capabilities.

Comprehensive AI Education

Building an ethical AI ecosystem demands education at multiple levels. Developers need training that goes beyond technical skills to include ethical reasoning and bias recognition. Users require awareness of AI's capabilities and limitations to make informed decisions about its use. Public education initiatives can foster more nuanced discussions about AI's role in society, helping shape policies that balance innovation with protection.

Dynamic Ethical Frameworks

Static ethical guidelines quickly become obsolete in AI's rapidly evolving landscape. Effective frameworks must incorporate mechanisms for regular review and updating, responding to both technological advances and societal changes. This includes developing assessment metrics that can evaluate an AI system's ethical performance across its lifecycle, from development through deployment and eventual decommissioning.

Continuous Ethical Monitoring

Responsible AI requires ongoing vigilance rather than one-time compliance checks. Regular audits should assess algorithm performance, identify emerging biases, and verify that systems continue operating as intended. This process must include diverse stakeholder perspectives, ensuring that potential impacts are evaluated from multiple angles. Such comprehensive monitoring represents our best defense against unintended consequences while enabling continuous improvement of AI systems.
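A recurring audit can be as simple as recomputing a fairness metric on each new window of labeled production data and flagging windows where it degrades. The sketch below assumes records arrive as hypothetical `(group, prediction, label)` tuples grouped into time windows, and flags any window where the gap between the highest and lowest group error rates exceeds an assumed tolerance of 0.1. The names and threshold are illustrative; a real audit would track several metrics and route flags to the people responsible for the system.

```python
from collections import defaultdict


def group_error_rates(records):
    """Error rate per group for (group, prediction, label) records."""
    totals, errors = defaultdict(int), defaultdict(int)
    for group, pred, label in records:
        totals[group] += 1
        errors[group] += int(pred != label)
    return {g: errors[g] / totals[g] for g in totals}


def audit_windows(windows, max_gap=0.1):
    """Return (window_index, gap, rates) for each window whose error-rate gap exceeds max_gap."""
    flagged = []
    for i, records in enumerate(windows):
        rates = group_error_rates(records)
        if len(rates) < 2:
            continue  # a gap needs at least two groups to compare
        gap = max(rates.values()) - min(rates.values())
        if gap > max_gap:
            flagged.append((i, gap, rates))
    return flagged


# Hypothetical audit data: the model starts erring on group B in the second window.
windows = [
    [("A", 1, 1), ("A", 0, 0), ("B", 1, 1), ("B", 0, 0)],
    [("A", 1, 1), ("A", 0, 0), ("B", 1, 0), ("B", 0, 0)],
]
flagged = audit_windows(windows)
```

Only the second window is flagged, with group B's error rate at 0.5 against group A's 0.0: a bias that emerged after deployment and that a one-time compliance check would have missed.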