Ethical AI Development in EdTech: Building Trust and Accountability

Fostering Inclusivity and Avoiding Bias in AI-Driven Learning Platforms

Ensuring Equitable Access to AI-Powered Learning

AI-driven learning platforms have the potential to reshape education, but only if access to them is equitable. These platforms must be designed and implemented with a clear understanding of the diverse needs of learners, including those with disabilities, socioeconomic disadvantages, or varying cultural backgrounds. Failing to consider these factors can exacerbate existing inequalities, widening the digital divide and holding back the very learners these platforms aim to empower. Accessibility features, language support, and culturally sensitive content all need deliberate attention so that every learner can engage effectively with the platform.

Furthermore, the data used to train these AI systems should be representative of the diverse student populations they are intended to serve. Biased datasets can perpetuate existing societal biases and lead to discriminatory outcomes. This requires careful curation and validation of the data to ensure that it accurately reflects the diversity within the learning community. Continuous monitoring and evaluation of the platform's impact on different student groups are crucial for identifying and rectifying any biases that may emerge.
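
One way to make the representativeness check concrete is to compare each group's share of the training data with its share of the student population the platform is meant to serve. The sketch below is a minimal illustration under stated assumptions: the group labels, the population shares, and the 5-percentage-point tolerance are all hypothetical, and a real audit would choose groups and thresholds with educators and domain experts.

```python
from collections import Counter


def representation_gaps(training_groups, population_shares):
    """Return (share in training data - share in population) for each group.

    A negative value means the group is underrepresented in the training data.
    """
    total = len(training_groups)
    if total == 0:
        raise ValueError("training_groups must not be empty")
    counts = Counter(training_groups)
    return {
        group: counts.get(group, 0) / total - expected_share
        for group, expected_share in population_shares.items()
    }


# Hypothetical data: one group label per training record.
training_groups = ["A"] * 70 + ["B"] * 20 + ["C"] * 10
# Hypothetical shares of each group in the intended student population.
population_shares = {"A": 0.5, "B": 0.3, "C": 0.2}

gaps = representation_gaps(training_groups, population_shares)

# Assumed tolerance: flag groups more than 5 percentage points short.
underrepresented = [group for group, gap in gaps.items() if gap < -0.05]
print(underrepresented)
```

Running the same comparison each time the training data is refreshed gives a simple, repeatable signal for the continuous monitoring described above.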

Mitigating Bias in Algorithm Design and Implementation

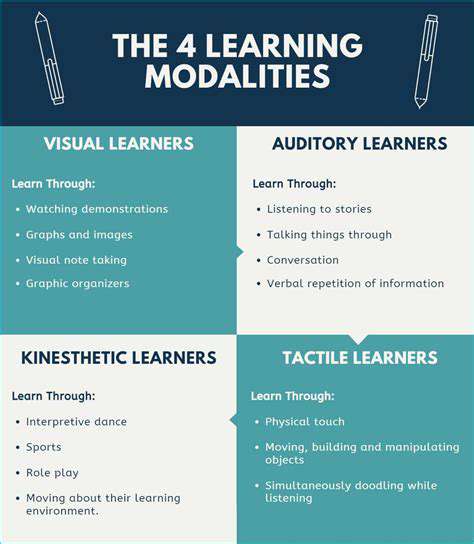

The algorithms underpinning AI-driven learning platforms can inadvertently perpetuate biases if they are not carefully designed and implemented. For instance, algorithms that prioritize certain learning styles or assessment methods might disproportionately disadvantage learners who don't conform to those norms. Transparency in algorithm design is essential both for understanding how decisions are made and for identifying potential biases. Clear documentation of the algorithms' decision-making processes, written so that independent reviewers can audit them, is crucial to maintaining fairness and accountability.

Careful attention must also be paid to the data used to train and refine these algorithms. Algorithms can amplify and reinforce societal biases present in their training data, leading to unfair or inaccurate outcomes. Data collection, labeling, and preprocessing should each be monitored rigorously to identify and mitigate these biases. Fairness-aware learning techniques, such as reweighting training examples or adding fairness constraints to the training objective, can then be used to build algorithms that account for and reduce disparities between groups.
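
As one illustration of a fairness-aware preprocessing step, the sketch below computes per-example weights using the reweighing idea: each example is weighted by P(group) × P(label) / P(group, label), so that group membership and label are statistically independent in the weighted data. This is only one of several possible techniques, not a method the text prescribes, and the group and label values are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(groups)
    if n == 0 or n != len(labels):
        raise ValueError("groups and labels must be non-empty and equal length")
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # (c_g / n) * (c_y / n) / (c_gy / n) simplifies to c_g * c_y / (n * c_gy).
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical data: group A receives the positive label more often than B.
groups = ["A", "A", "A", "B", "B", "B"]
labels = [1, 1, 0, 1, 0, 0]

weights = reweighing_weights(groups, labels)
print(weights)
```

The resulting weights can be passed to any training routine that accepts per-sample weights. Over-represented (group, label) combinations are down-weighted and under-represented ones are up-weighted.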

Promoting Transparency and Explainability in AI Systems

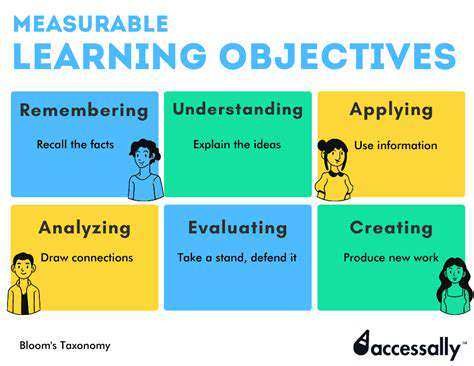

Understanding how AI-driven learning platforms arrive at their conclusions is crucial for building trust and ensuring accountability. A lack of transparency can breed a sense of powerlessness and suspicion among learners and educators alike. Explainable AI (XAI) techniques can provide insight into the decision-making processes of these systems, allowing users to understand the reasoning behind recommendations, assessments, or personalized learning pathways. This deepens users' understanding of how the platform works and makes it possible to identify and correct errors or biases.
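
For the simplest class of models, an explanation can be read directly from the model itself. The sketch below assumes a hypothetical linear scoring model and reports how much each input feature contributed to a learner's score, ranked by magnitude. The feature names and weights are invented for illustration, and more complex models would need dedicated XAI methods rather than this direct decomposition.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and per-feature contributions, largest magnitude first."""
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical model weights and one learner's feature values.
weights = {
    "quiz_average": 2.0,
    "days_since_last_login": -0.5,
    "videos_watched": 0.25,
}
features = {
    "quiz_average": 0.5,
    "days_since_last_login": 4.0,
    "videos_watched": 2.0,
}

score, ranked = explain_linear_score(weights, features)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Showing the ranked contributions next to a recommendation gives learners and educators a concrete basis for questioning it.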

Cultivating a Culture of Inclusivity and Continuous Improvement

Building inclusive AI-driven learning platforms requires a multifaceted approach that goes beyond technical considerations. Creating a culture of inclusivity within the development and implementation teams is vital. Diverse perspectives and experiences are essential for recognizing and addressing potential biases in the system. Regular feedback mechanisms from learners, educators, and other stakeholders are paramount for identifying areas for improvement and ensuring continuous refinement of the platform. This collaborative approach ensures that the platform remains responsive to the evolving needs and expectations of the learning community.

Accountability and Ethical Frameworks for AI-Driven Learning Platforms

Establishing clear guidelines and ethical frameworks is critical for ensuring responsible AI development and implementation in the education sector. These frameworks should address data privacy, security, and algorithmic fairness. Robust mechanisms for accountability and redress should be in place to handle any instances of bias or discrimination that arise. Regular reviews and audits of the platform's functionality and impact are essential to ensure ongoing compliance with ethical standards. Clear channels for reporting concerns and grievances build trust and make it more likely that users will speak up when something goes wrong.

Building Accountability Mechanisms for AI-Driven Educational Decisions

Establishing Clear Guidelines and Transparency

A crucial aspect of building accountability for AI-driven educational decisions is establishing clear and transparent guidelines. These guidelines should explicitly detail the criteria used by the AI systems, the types of data considered, and the potential biases inherent in the algorithms. Transparency ensures that educators, students, and parents understand how the AI system arrives at its conclusions and can challenge or question its recommendations if necessary, fostering trust and promoting ethical use.

Furthermore, clear communication channels must be established to facilitate feedback and address concerns regarding the AI's outputs. This includes avenues for reporting errors, biases, or inappropriate recommendations. Such transparency fosters a culture of accountability and allows for continuous improvement of the AI system by incorporating feedback from various stakeholders.

Defining Roles and Responsibilities

Establishing clear roles and responsibilities within the educational system is essential so that a named person or team is accountable for the AI's outputs. This includes specifying who is responsible for monitoring the AI's performance, evaluating its impact on student learning, and addressing any issues or biases. Defined roles also make clear who must take which steps to rectify errors or biases detected in the AI's decisions.

Ensuring Data Privacy and Security

Robust data privacy and security protocols are paramount when utilizing AI in education. The AI systems must adhere to stringent data protection regulations and ensure that student data is handled responsibly and ethically. This includes implementing measures to safeguard sensitive information, anonymizing data where possible, and obtaining explicit consent from students and parents for data collection and use.
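
One common safeguard is to replace raw student identifiers with keyed hashes before data reaches analytics or model-training pipelines. The sketch below uses HMAC-SHA256 from Python's standard library. Note that this is pseudonymization rather than full anonymization, since anyone holding the key can re-link records. The function name and the key value are hypothetical, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac


def pseudonymize_student_id(student_id: str, secret_key: bytes) -> str:
    """Return a stable keyed hash of the ID so records can be joined
    without exposing the original identifier."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Placeholder key for illustration only; never hard-code a real key.
secret_key = b"replace-with-a-key-from-a-secrets-manager"

record = {"student_id": "student-001", "quiz_score": 87}
safe_record = {
    "student_ref": pseudonymize_student_id(record["student_id"], secret_key),
    "quiz_score": record["quiz_score"],
}
print(safe_record)
```

Using a keyed hash rather than a plain hash means identifiers cannot be recovered simply by hashing a list of known student IDs without the key.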

Incorporating Human Oversight and Judgment

While AI can offer valuable insights and automate tasks, it's crucial to maintain human oversight and judgment in educational decision-making. Educators should not cede all decision-making authority to AI systems. Instead, AI should be used as a tool to support and augment human judgment, allowing educators to carefully consider the AI's recommendations in the context of each student's unique needs and circumstances. This ensures that human values and compassion remain central to the educational process.
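
A minimal sketch of how this oversight might be wired into a platform is shown below. It assumes a hypothetical `Recommendation` record with a model confidence value and a high-stakes flag. The 0.8 threshold and the routing labels are illustrative assumptions, not a standard. Even the high-confidence path only surfaces a suggestion, and the educator still decides.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    student: str
    action: str
    confidence: float  # model's self-reported confidence in [0, 1]
    high_stakes: bool = False  # e.g. placement or grading decisions


def route(rec: Recommendation, threshold: float = 0.8) -> str:
    """Decide how a recommendation is presented; nothing is auto-applied."""
    if rec.high_stakes or rec.confidence < threshold:
        # Requires an explicit educator decision before anything happens.
        return "educator_review"
    # Shown as an optional suggestion the educator can accept or dismiss.
    return "suggest_to_educator"


print(route(Recommendation("s1", "extra practice set", 0.95)))
print(route(Recommendation("s2", "move to remedial track", 0.97, high_stakes=True)))
print(route(Recommendation("s3", "skip unit 4", 0.55)))
```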

Evaluating AI's Impact on Student Outcomes

Regular and comprehensive evaluation of the AI system's impact on student outcomes is critical for ensuring accountability. This includes tracking key metrics such as student performance, engagement, and well-being. Analyzing the data collected enables educators to identify areas where the AI system may be falling short or exacerbating existing inequalities. Regular assessments allow for adjustments to the AI system and its use to optimize outcomes and ensure equitable access to quality education for all students.

Developing Mechanisms for Addressing Bias and Fairness

Addressing potential biases embedded within the AI's algorithms is crucial for creating a fair and equitable educational experience for all students. This requires careful consideration of the data used to train the AI, as well as ongoing monitoring for biases in the AI's outputs. Furthermore, mechanisms for detecting and mitigating bias must be integrated into the system. This includes developing methods to identify and challenge potentially discriminatory outcomes, and implementing procedures for remediation when such biases are identified. Such proactive steps are essential to ensuring that the AI system does not perpetuate existing inequalities.
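
As a sketch of what a bias-detection check on the AI's outputs could look like, the code below computes the rate of favorable outcomes per group and the ratio between the lowest and highest rate. The data is hypothetical. The 0.8 cut-off is the "four-fifths" rule of thumb borrowed from employment-selection practice, used here only as an assumed starting point and not as an established standard for education.

```python
from collections import defaultdict


def selection_rates(groups, outcomes):
    """Return the fraction of favorable outcomes (truthy values) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return lowest group rate divided by highest group rate (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical outputs: 1 = recommended for an advanced learning pathway.
groups = ["A"] * 10 + ["B"] * 10
outcomes = [1] * 8 + [0] * 2 + [1] * 5 + [0] * 5

rates = selection_rates(groups, outcomes)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: flag for human investigation below 0.8.
needs_review = ratio < 0.8
print(rates, round(ratio, 3), needs_review)
```

A flagged ratio does not prove discrimination on its own. It is a trigger for the remediation procedures described above, starting with a human review of the affected recommendations.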
