Ethical AI in Education: Addressing Bias and Ensuring Fairness

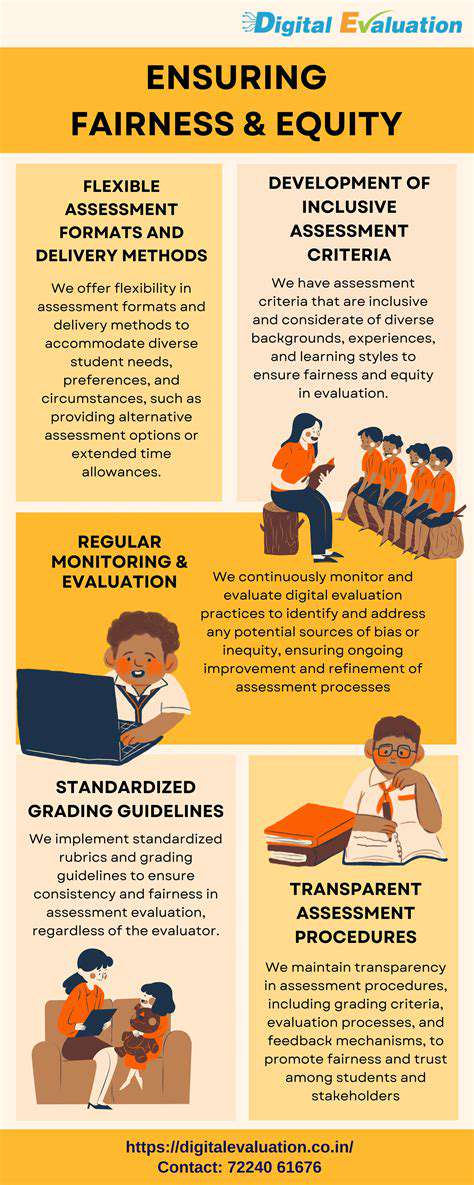

Ensuring Fairness and Equity in AI-Driven Assessments

Defining Fairness and Equity in AI

When we talk about fairness and equity in artificial intelligence systems, we are addressing foundational ethical requirements for responsible technology. These principles go beyond basic anti-bias measures: they demand an understanding of how automated systems can reinforce or worsen existing social disparities. The two terms are related but distinct. A fair AI system applies consistent standards and treats all users without unjustified discrimination, while equity recognizes that different groups may need tailored support to reach comparable outcomes. This distinction forms the bedrock of ethical AI implementation.

Understanding these concepts also requires recognizing the different types of bias that can enter machine learning models. These distortions can originate in the training data, in the design of the algorithm, or in the unconscious assumptions of developers. Identifying and mitigating them is essential to keep AI from magnifying existing social inequities.

Data Bias and its Impact

Machine learning models learn from large datasets, and when those datasets reflect societal prejudices, the models tend to reproduce them in their outputs. Facial analysis software trained predominantly on lighter-skinned faces, for example, often performs noticeably worse at identifying darker-skinned individuals. Such performance gaps result directly from insufficient diversity in the training data. Detecting and correcting data bias is therefore the first critical step toward equitable AI.
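
Performance gaps like these only become visible when results are broken down by group rather than reported as a single overall score. The sketch below disaggregates accuracy by a group label; the function names and the toy data are hypothetical, and accuracy stands in for whatever evaluation metric a real system uses.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} from parallel lists of labels, predictions, and group tags."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(acc_by_group):
    """Largest difference in accuracy between the best- and worst-served groups."""
    return max(acc_by_group.values()) - min(acc_by_group.values())


# Hypothetical toy evaluation set: group "A" dominates, group "B" is sparse.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B"]

acc = accuracy_by_group(y_true, y_pred, groups)
print(acc)  # {'A': 1.0, 'B': 0.3333333333333333}
print(max_accuracy_gap(acc))
```

An overall accuracy of 75% on this toy set would hide the fact that group B is served far worse than group A.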

The way data is collected can itself introduce distortions. Data gathered from narrow demographic pools or specific regions often fails to represent the broader population, which skews model behavior. This is why diverse, representative data is essential for avoiding biased outcomes.
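
A simple first check for collection bias is to compare each group's share of the training data with its share of the population the system will serve. The sketch below assumes such reference shares are available (for example from enrollment statistics); the group labels, the shares, and the 5-point tolerance are all illustrative assumptions.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return {group: sample share - reference share} for each group in the reference.

    Negative values indicate the group is under-represented in the sample.
    Groups present in the sample but absent from the reference are ignored.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {g: counts.get(g, 0) / n - share for g, share in population_shares.items()}


# Hypothetical: 100 records collected mostly from urban schools.
sample = ["urban"] * 80 + ["rural"] * 20
reference = {"urban": 0.6, "rural": 0.4}  # assumed shares in the target population

gaps = representation_gaps(sample, reference)
under_represented = [g for g, d in gaps.items() if d < -0.05]  # 5-point tolerance is arbitrary
print(under_represented)  # ['rural']
```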

Algorithmic Bias in AI Models

Even with perfectly balanced training data, the algorithms themselves can introduce distortions. Design choices such as the objective a model optimizes, the features it relies on, or the decision thresholds applied to its scores can unintentionally favor particular groups. These algorithmic biases can appear as prejudiced predictions, unequal distributions of outcomes, or reinforced stereotypes. Counteracting them requires careful analysis of a model's design and of how it might amplify discrimination.
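
Unequal outcome distributions can be quantified directly from a model's decisions. The sketch below computes two widely used group-fairness measures: the demographic parity difference (gap in positive-decision rates) and the disparate impact ratio (lowest rate divided by highest). The function names and data are hypothetical, and the predictions are assumed to be binary.

```python
def selection_rates(y_pred, groups):
    """Share of positive predictions (1s) for each group."""
    rates = {}
    for g in sorted(set(groups)):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return rates


def parity_metrics(y_pred, groups):
    """Demographic parity difference and disparate impact ratio across groups."""
    rates = selection_rates(y_pred, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "demographic_parity_difference": highest - lowest,
        # Ratio of the lowest to the highest selection rate; 1.0 means equal rates.
        "disparate_impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


# Hypothetical predictions, e.g. 1 = "recommended for an advanced track".
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
metrics = parity_metrics(y_pred, groups)
print(metrics)
```

A disparate impact ratio below 0.8 (the "four-fifths rule" from US employment-selection guidance) is sometimes used as a rough warning sign. It is a heuristic, not a definitive test of fairness, and equal selection rates are not always the right goal.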

Addressing Bias in AI Development Processes

Eliminating bias requires proactive measures across the entire development pipeline: careful data preparation, thoughtful algorithm selection, thorough model testing, and continuous performance monitoring. Engineering teams should build bias checks into each phase so that potential sources of discrimination are caught and addressed early. Incorporating diverse viewpoints throughout this process is equally essential.
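
One way to make such checks enforceable is a "gate" in the testing stage that fails when a fairness metric exceeds an agreed limit. The sketch below uses the equal-opportunity gap (the difference in true positive rates between groups); the `bias_gate` name and the 0.1 default limit are hypothetical choices a team would set for itself.

```python
def true_positive_rate(y_true, y_pred):
    """Fraction of actual positives that the model predicted as positive."""
    hits = [pred for truth, pred in zip(y_true, y_pred) if truth == 1]
    return sum(hits) / len(hits) if hits else None


def equal_opportunity_gap(y_true, y_pred, groups):
    """Max difference in true positive rate between groups (groups with no positives are skipped)."""
    tprs = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        tpr = true_positive_rate([y_true[i] for i in idx], [y_pred[i] for i in idx])
        if tpr is not None:
            tprs[g] = tpr
    gap = max(tprs.values()) - min(tprs.values()) if len(tprs) >= 2 else 0.0
    return gap, tprs


def bias_gate(y_true, y_pred, groups, max_gap=0.1):
    """Raise if the equal-opportunity gap exceeds max_gap, e.g. to fail a test stage."""
    gap, tprs = equal_opportunity_gap(y_true, y_pred, groups)
    if gap > max_gap:
        raise ValueError(f"Equal-opportunity gap {gap:.2f} exceeds {max_gap}: {tprs}")
    return tprs


# Hypothetical held-out test set.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

try:
    bias_gate(y_true, y_pred, groups)
except ValueError as err:
    print("Gate failed:", err)
```

Raising an exception lets an ordinary test runner or CI job treat a fairness regression the same way it treats any other failing test.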

Ethical Frameworks for AI Development

Establishing clear ethical standards for AI is a fundamental requirement for equitable systems. These guidelines should cover data protection, algorithmic explainability, accountability structures, and assessments of societal impact. Well-constructed ethical frameworks give developers the tools to navigate the complex moral challenges of deploying AI.

Monitoring and Evaluation of AI Systems

Continuous fairness auditing remains critical once AI systems are in operation. Regular assessments can detect emerging biases and confirm that the system still meets ethical standards. Ongoing oversight helps ensure that system performance does not inadvertently reinforce existing prejudices. Effective implementations also give users channels for reporting potential issues.
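
In production, such audits can run continuously over a sliding window of recent decisions, so that drift shows up quickly. The sketch below is a minimal in-memory monitor; the class name, window size, tolerance, and minimum-count rule are all assumptions, and a real deployment would persist the data and route alerts to people who can act on them.

```python
from collections import deque


class ParityMonitor:
    """Sliding-window check of per-group positive-decision rates in production."""

    def __init__(self, window=500, max_gap=0.1, min_count=30):
        self.recent = deque(maxlen=window)  # oldest decisions drop off automatically
        self.max_gap = max_gap
        self.min_count = min_count  # ignore groups with too few recent decisions

    def record(self, group, positive):
        self.recent.append((group, bool(positive)))

    def check(self):
        """Return (gap, rates); gap is None until two groups have min_count decisions."""
        totals, positives = {}, {}
        for group, positive in self.recent:
            totals[group] = totals.get(group, 0) + 1
            positives[group] = positives.get(group, 0) + int(positive)
        rates = {g: positives[g] / totals[g] for g in totals if totals[g] >= self.min_count}
        if len(rates) < 2:
            return None, rates
        return max(rates.values()) - min(rates.values()), rates

    def alert(self):
        gap, _ = self.check()
        return gap is not None and gap > self.max_gap


monitor = ParityMonitor(window=100, max_gap=0.1, min_count=10)
for i in range(50):
    monitor.record("A", i % 2 == 0)  # 50% positive
for i in range(50):
    monitor.record("B", i % 5 == 0)  # 20% positive
if monitor.alert():
    print("Fairness alert:", monitor.check())
```

The minimum-count rule avoids raising alarms from a handful of decisions for a small group, at the cost of reacting more slowly for that group.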

Promoting Diversity and Inclusion in AI

Building diverse development teams is a crucial strategy for creating genuinely equitable systems. Teams with varied backgrounds and perspectives are better placed to anticipate discrimination risks and to develop more robust solutions. Workforce diversity serves ethical goals and also drives technical innovation by broadening the range of approaches a team considers. Achieving it requires active outreach to historically underrepresented talent pools.

Promoting Inclusivity Through Personalized Learning Pathways

Personalized Learning for Diverse Needs

Customized learning pathways are essential for inclusive learning environments that accommodate individual cognitive styles, pace, and academic needs. This approach accepts that learners process information differently and progress at different speeds. By adapting teaching methods and assessment criteria to individual needs, instructors create more supportive classrooms for all students, regardless of background or ability. The approach particularly benefits neurodivergent learners, culturally diverse students, and students with other learning preferences.

Implementing personalized learning effectively requires careful analysis of student needs, using multiple assessment techniques such as ongoing formative assessment and regular progress reviews. These let teachers monitor progress continuously and adjust the curriculum when necessary. Tailored methods like these can lead to measurable improvements in student achievement and engagement.
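
The article does not prescribe a model for this kind of ongoing monitoring. One established option is Bayesian Knowledge Tracing (BKT), which updates the probability that a student has mastered a skill after each formative-assessment response. The sketch below implements the standard BKT update; the parameter values are illustrative assumptions, since in practice they are fitted per skill from historical data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BKTParams:
    p_init: float = 0.2    # prior probability the skill is already mastered
    p_learn: float = 0.15  # chance of learning the skill after each practice opportunity
    p_slip: float = 0.1    # chance of answering wrong despite mastery
    p_guess: float = 0.2   # chance of answering right without mastery


def bkt_update(p_mastery, correct, params):
    """One BKT step: condition on the observed answer, then allow for learning."""
    if correct:
        num = p_mastery * (1 - params.p_slip)
        den = num + (1 - p_mastery) * params.p_guess
    else:
        num = p_mastery * params.p_slip
        den = num + (1 - p_mastery) * (1 - params.p_guess)
    posterior = num / den
    return posterior + (1 - posterior) * params.p_learn


def trace_mastery(responses, params=BKTParams()):
    """Return the mastery estimate after each response (True = correct)."""
    p = params.p_init
    history = []
    for correct in responses:
        p = bkt_update(p, correct, params)
        history.append(p)
    return history


history = trace_mastery([True, True, False, True])
print([round(p, 2) for p in history])
```

A teacher-facing tool might, for instance, treat an estimate above some cutoff as "ready to move on"; that cutoff is a pedagogical decision, not something the model supplies.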

Accessibility and Equity in Educational Technology

Guaranteeing universal access to digital learning tools is a cornerstone of inclusive education. Platforms must be usable by students with disabilities and by students in varied economic circumstances, with features such as screen reader compatibility, descriptive alternative text for images, and adjustable text display. Comprehensive accessibility standards help equalize educational opportunity across diverse learner populations.
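
Some of these requirements can be checked automatically. As one example, the sketch below uses Python's standard-library `html.parser` to find images without alternative text, the text a screen reader announces in place of an image (WCAG 2 success criterion 1.1.1, Non-text Content). The class name is hypothetical, and a check like this covers only a small part of accessibility; keyboard navigation, contrast, and testing with real assistive technology still matter.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Flag <img> elements with no alt attribute, and list empty-alt images for review."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []  # no alt attribute at all: a likely accessibility failure
        self.empty_alt = []    # alt="" marks an image as decorative; confirm that is intended

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "<no src>"
        if "alt" not in attributes:
            self.missing_alt.append(src)
        elif not (attributes["alt"] or "").strip():
            self.empty_alt.append(src)


audit = AltTextAudit()
audit.feed(
    '<p><img src="chart.png">'
    '<img src="divider.png" alt="">'
    '<img src="map.png" alt="Map of the school campus"/></p>'
)
print("Missing alt:", audit.missing_alt)
print("Empty alt (check decorative):", audit.empty_alt)
```

Empty `alt` values are reported separately rather than as errors because an empty `alt` is the correct markup for purely decorative images.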

Culturally Responsive Pedagogy

Teaching methods that acknowledge student diversity create more welcoming learning environments. This approach values learners' varied cultural backgrounds and life experiences and adapts content and techniques to reflect the diversity of the classroom. Recognizing students' cultural identities helps build inclusive classrooms where everyone feels respected.

In practice, this means integrating culturally relevant examples and materials to boost engagement. Presenting multiple cultural perspectives in the curriculum helps students develop nuanced worldviews and an appreciation of global diversity.

Addressing Learning Differences with AI-Powered Tools

Intelligent educational technologies can help identify and accommodate differences in how students learn. By analyzing student performance data, these systems can detect patterns and suggest adjustments to instruction. This helps teachers understand individual needs and adapt their approaches accordingly. Machine learning can also personalize learning resources, delivering materials matched to each student's learning preferences.

AI in Personalized Feedback and Support

AI can significantly strengthen personalized academic support. Intelligent tutoring systems analyze performance in real time and pinpoint areas that need reinforcement. This immediate feedback lets students learn at their own pace while targeting specific skills. AI assistants can also answer questions around the clock, outside traditional classroom hours.
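
At its simplest, pinpointing areas that need reinforcement means grouping a student's recent answers by skill and surfacing the weakest ones. The sketch below does exactly that; the function name, the skill tags, the 70% threshold, and the minimum of three attempts are illustrative assumptions rather than features of any particular tutoring product.

```python
from collections import defaultdict


def skills_to_reinforce(attempts, threshold=0.7, min_attempts=3):
    """Return (skill, accuracy) pairs below threshold, weakest first.

    attempts: iterable of (skill, correct) pairs from recent practice items.
    Skills with fewer than min_attempts answers are skipped as too noisy.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for skill, ok in attempts:
        totals[skill] += 1
        correct[skill] += int(ok)
    scores = {s: correct[s] / totals[s] for s in totals if totals[s] >= min_attempts}
    weak = [(s, acc) for s, acc in scores.items() if acc < threshold]
    return sorted(weak, key=lambda pair: pair[1])


attempts = [
    ("fractions", True), ("fractions", False), ("fractions", False),
    ("decimals", True), ("decimals", True), ("decimals", True),
    ("ratios", False), ("ratios", True), ("ratios", False), ("ratios", True),
    ("percentages", False),
]
for skill, acc in skills_to_reinforce(attempts):
    print(f"Review {skill}: {acc:.0%} correct on recent items")
```

Here "percentages" is not flagged despite a wrong answer, because one item is too little evidence to judge a skill.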

Ethical Considerations in Data Collection and Use

AI-enhanced education raises critical data ethics concerns. Institutions must implement rigorous data protection practices that comply with privacy laws and security standards. Clear policies on how educational data may be used are essential to prevent misuse and to ensure the data is used only to improve learning. Transparent communication with families about data practices helps maintain trust and ethical integrity.
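
One common safeguard behind such policies is pseudonymization: analytics pipelines work with a keyed hash of each student identifier instead of the raw ID, so records can still be linked across sessions without revealing who the student is. The sketch below uses HMAC-SHA256 from Python's standard library; the record fields are hypothetical. Anyone holding the key can recompute the mapping for known IDs, and laws such as the EU's GDPR generally still treat pseudonymized data as personal data, so this complements rather than replaces access controls and retention limits.

```python
import hashlib
import hmac
import secrets


def pseudonymize(student_id: str, key: bytes) -> str:
    """Replace a student ID with a keyed HMAC-SHA256 digest (stable for a given key)."""
    return hmac.new(key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Assumption: in a real deployment the key comes from a secrets manager and is
# stored separately from the data; generating it inline is for illustration only.
key = secrets.token_bytes(32)

record = {"student_id": "S-10042", "quiz": "fractions-1", "score": 0.8}
analytics_record = {**record, "student_id": pseudonymize(record["student_id"], key)}
print(analytics_record)
```

A keyed HMAC is used instead of a plain hash because student IDs often follow predictable formats, which would let an attacker hash every possible ID and reverse an unkeyed digest.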
